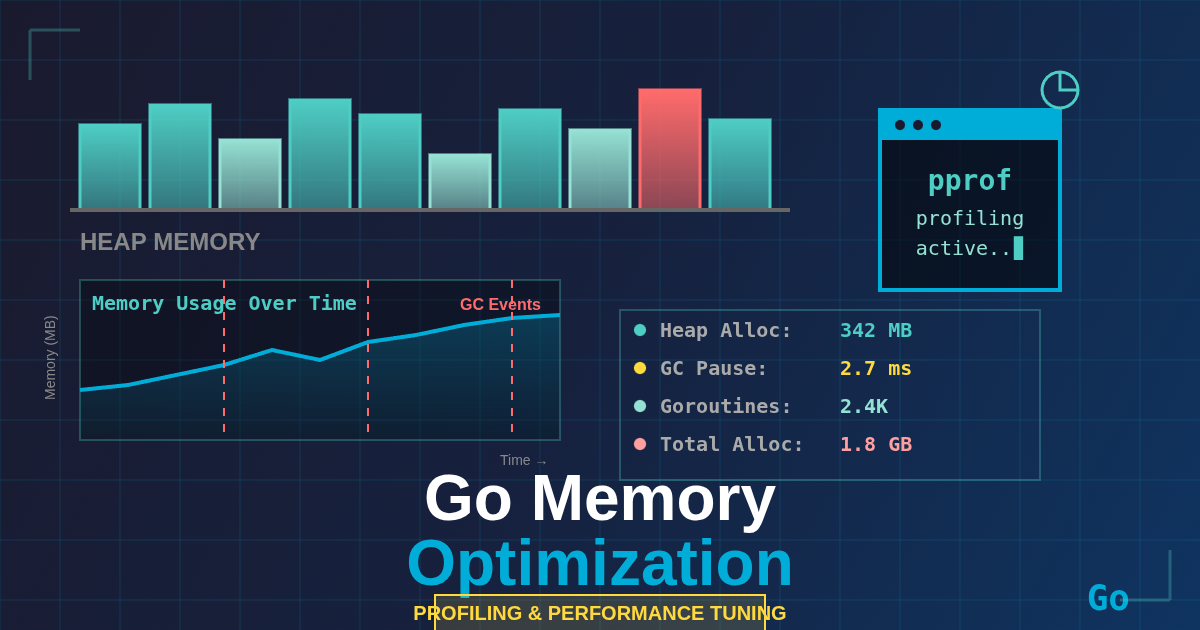

Go Memory Optimization: Profiling and Performance Tuning Techniques

Memory optimization in Go applications is a critical skill that separates good developers from great ones. While Go’s garbage collector handles memory management automatically, understanding how to profile, analyze, and optimize memory usage can dramatically improve your application’s performance, reduce infrastructure costs, and enhance user experience. In production environments where every millisecond and megabyte counts, these skills become invaluable.

Go provides exceptional built-in tooling for memory profiling through the runtime/pprof and net/http/pprof packages and the runtime's memory statistics. Unlike many other languages where memory profiling requires external tools or complex setups, Go makes it straightforward to identify memory bottlenecks, analyze allocation patterns, and tune garbage collection behavior. This accessibility doesn't diminish the complexity of the task: effective memory optimization requires a solid understanding of how Go allocates memory, how its garbage collector works, and how to read profiles.

The impact of proper memory optimization extends beyond just performance metrics. Applications with optimized memory usage consume fewer resources, scale better under load, experience fewer garbage collection pauses, and provide more predictable performance characteristics. In cloud environments where you pay for resources, memory optimization directly translates to cost savings and improved application reliability.

Prerequisites

Before diving into memory optimization techniques, you should have:

- Solid Go fundamentals: Understanding of pointers, slices, maps, channels, and goroutines

- Basic profiling knowledge: Familiarity with the concept of profiling and performance analysis

- Command-line comfort: Ability to use Go tools like go tool pprof and go test

- Production environment experience: Understanding of how applications behave under real-world load

- Go toolchain setup: Go 1.19+ installed (GOMEMLIMIT requires Go 1.19), with go tool pprof, which ships with the toolchain

Understanding Go’s Memory Model

Memory Allocation Basics

Go manages memory through a sophisticated allocator that works closely with the garbage collector. Understanding this system is crucial for effective optimization. The Go runtime allocates memory in two primary areas: the stack for local variables and function calls, and the heap for dynamically allocated objects that may outlive their creating function.

Stack allocation is extremely fast and doesn’t require garbage collection, as memory is automatically reclaimed when functions return. Heap allocation, while more flexible, requires garbage collection and involves more overhead. The Go compiler performs escape analysis to determine whether variables should be allocated on the stack or heap.
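To see escape analysis in action, here is a minimal sketch contrasting a value that stays on the stack with one whose address is returned. The names point, sumOnStack, newOnHeap, and the sink variables are illustrative. Building it with go build -gcflags="-m" should report the escaping variable (typically as "moved to heap: p"), and testing.AllocsPerRun shows the difference at run time. The //go:noinline directive is there only so inlining cannot hide the allocation.

```go
package main

import (
	"fmt"
	"testing"
)

type point struct{ x, y int }

// Package-level sinks force results to outlive the call being measured.
var (
	sinkInt   int
	sinkPoint *point
)

// sumOnStack never lets p's address leave the function, so p can live on the stack.
func sumOnStack(x, y int) int {
	p := point{x, y}
	return p.x + p.y
}

// newOnHeap returns &p, so p must outlive the call and escapes to the heap.
//
//go:noinline
func newOnHeap(x, y int) *point {
	p := point{x, y}
	return &p
}

func main() {
	stackAllocs := testing.AllocsPerRun(1000, func() { sinkInt = sumOnStack(1, 2) })
	heapAllocs := testing.AllocsPerRun(1000, func() { sinkPoint = newOnHeap(1, 2) })
	fmt.Printf("stack version: %.0f allocs/op, heap version: %.0f allocs/op\n",
		stackAllocs, heapAllocs)
}
```

The struct is identical in both functions; only whether its address outlives the call decides where it is allocated.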

Garbage Collection Overview

Go uses a concurrent, tricolor mark-and-sweep garbage collector optimized for low latency. The GC runs concurrently with your application, but it still introduces pauses and overhead. Understanding GC behavior helps you write code that minimizes its impact:

- Mark phase: Identifies reachable objects

- Sweep phase: Reclaims unreachable objects

- Concurrent execution: Runs alongside your application with minimal stop-the-world pauses
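The effect of the mark and sweep phases can be observed directly: once the last reference to a large allocation is dropped, the next collection reclaims it. This sketch forces collections with runtime.GC() purely for demonstration; the global big and the 64 MB size are arbitrary choices.

```go
package main

import (
	"fmt"
	"runtime"
)

// big holds the only reference to a large allocation.
var big []byte

// heapAllocAfterGC forces a full collection and returns the live heap size in bytes.
func heapAllocAfterGC() uint64 {
	runtime.GC()
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return m.HeapAlloc
}

func main() {
	big = make([]byte, 64<<20) // 64 MB, reachable through the global
	withRef := heapAllocAfterGC()

	big = nil // The mark phase can no longer reach the array, so sweep frees it
	withoutRef := heapAllocAfterGC()

	fmt.Printf("live heap with reference: %d MB, after dropping it: %d MB\n",
		withRef>>20, withoutRef>>20)
}
```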

Setting Up Memory Profiling

Basic Profiling Setup

The foundation of memory optimization is proper profiling setup. Go’s net/http/pprof package provides an HTTP interface for accessing profiling data:

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	_ "net/http/pprof" // Registers /debug/pprof/ handlers on http.DefaultServeMux
	"runtime"
	"sync"
	"time"
)

// DataProcessor simulates a memory-intensive service
type DataProcessor struct {
	cache map[string][]byte
	mu    sync.RWMutex
}

func NewDataProcessor() *DataProcessor {
	return &DataProcessor{
		cache: make(map[string][]byte),
	}
}

func (dp *DataProcessor) ProcessData(key string, size int) []byte {
	dp.mu.Lock()
	defer dp.mu.Unlock()

	// Return cached data if this key was processed before
	if data, exists := dp.cache[key]; exists {
		return data
	}

	// Create large data structure
	data := make([]byte, size)
	for i := range data {
		data[i] = byte(i % 256)
	}
	dp.cache[key] = data
	return data
}

func (dp *DataProcessor) GetStats() (int, int64) {
	dp.mu.RLock()
	defer dp.mu.RUnlock()

	var totalSize int64
	for _, data := range dp.cache {
		totalSize += int64(len(data))
	}
	return len(dp.cache), totalSize
}

func main() {
	// Enable profiling endpoint. Binding to localhost keeps the pprof
	// handlers off public network interfaces.
	go func() {
		log.Println("Profiling server starting on localhost:6060")
		log.Println("Access profiles at http://localhost:6060/debug/pprof/")
		log.Fatal(http.ListenAndServe("localhost:6060", nil))
	}()

	processor := NewDataProcessor()

	// Simulate workload
	go func() {
		for i := 0; i < 1000; i++ {
			key := fmt.Sprintf("data_%d", i%100) // Reuse some keys
			size := 1024 * (i%10 + 1)            // Varying sizes
			processor.ProcessData(key, size)
			time.Sleep(10 * time.Millisecond)
		}
	}()

	// Print memory statistics periodically
	ticker := time.NewTicker(5 * time.Second)
	defer ticker.Stop()

	for range ticker.C {
		var m runtime.MemStats
		runtime.ReadMemStats(&m)
		cacheEntries, cacheSize := processor.GetStats()

		fmt.Printf("Cache: %d entries, %d bytes\n", cacheEntries, cacheSize)
		fmt.Printf("Heap: %d KB, GC cycles: %d\n", m.HeapAlloc/1024, m.NumGC)
		fmt.Printf("Next GC: %d KB\n", m.NextGC/1024)
		fmt.Println("---")
	}
}
```

Command-Line Profiling

For applications without HTTP endpoints, you can use programmatic profiling:

```go
package main

import (
	"fmt"
	"os"
	"runtime"
	"runtime/pprof"
	"time"
)

// MemoryIntensiveTask simulates CPU and memory intensive work
func MemoryIntensiveTask() {
	// Create multiple data structures to stress memory
	data := make(map[int][]int)
	for i := 0; i < 10000; i++ {
		// Allocate varying sized slices
		slice := make([]int, i%1000+100)
		for j := range slice {
			slice[j] = j * i
		}
		data[i] = slice

		// Add short-lived garbage and force a collection for demonstration
		if i%1000 == 0 {
			temp := make([]byte, 1024*1024) // 1MB temporary allocation
			_ = temp
			runtime.GC()
		}
	}

	// Process the data
	total := 0
	for _, slice := range data {
		for _, val := range slice {
			total += val
		}
	}
	fmt.Printf("Processed %d entries, sum: %d\n", len(data), total)
}

// writeHeapProfile runs a GC so the profile reflects the current heap, then
// writes a point-in-time heap profile to path.
func writeHeapProfile(path string) error {
	f, err := os.Create(path)
	if err != nil {
		return err
	}
	defer f.Close()

	runtime.GC()
	return pprof.WriteHeapProfile(f)
}

func main() {
	// Baseline snapshot. Heap profiling has no separate "start" step:
	// WriteHeapProfile dumps the profile collected so far.
	if err := writeHeapProfile("mem.prof"); err != nil {
		panic(err)
	}

	fmt.Println("Starting memory-intensive task...")
	start := time.Now()
	MemoryIntensiveTask()
	fmt.Printf("Task completed in %v\n", time.Since(start))

	// Final snapshot. The task's data is unreachable by now, so the in-use
	// view will be small; the alloc_space view still shows what it allocated.
	if err := writeHeapProfile("mem_final.prof"); err != nil {
		panic(err)
	}

	// Print memory statistics
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	fmt.Println("Final memory stats:")
	fmt.Printf(" Heap Alloc: %d KB\n", m.HeapAlloc/1024)
	fmt.Printf(" Heap Sys: %d KB\n", m.HeapSys/1024)
	fmt.Printf(" GC Cycles: %d\n", m.NumGC)
	fmt.Printf(" Total Alloc: %d KB\n", m.TotalAlloc/1024)

	fmt.Println("\nTo analyze profiles, run:")
	fmt.Println(" go tool pprof mem.prof")
	fmt.Println(" go tool pprof -sample_index=alloc_space mem_final.prof")
}
```

Analyzing Memory Profiles with pprof

Interactive Analysis

Once you have profiling data, go tool pprof provides powerful analysis capabilities. The most common commands include:

- top: Shows the functions allocating the most memory

- list <function>: Shows line-by-line allocation within a function

- web: Generates a visual graph (requires Graphviz)

- peek <regex>: Shows callers and callees of matching functions

Tip: Use go tool pprof -http=:8080 mem.prof to launch a web interface with interactive graphs and flame charts.

Interpreting Profile Data

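pprof reports each function's allocations in two columns:

- Flat: Memory allocated directly by the function

- Cumulative: Memory allocated by the function and its callees

Heap profiles also carry four sample types: inuse_space and inuse_objects describe memory still live as of the most recent garbage collection, while alloc_space and alloc_objects cover everything allocated since the program started. Switch between them with -sample_index, for example go tool pprof -sample_index=alloc_space mem.prof. Use high cumulative values to find the expensive call paths, then use flat values to pinpoint the functions that actually allocate.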

Memory Optimization Techniques

Object Pooling

Object pooling reduces garbage collection pressure by reusing objects instead of allocating new ones:

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"time"
)

// Buffer represents a reusable buffer
type Buffer struct {
	data []byte
}

func (b *Buffer) Reset() {
	b.data = b.data[:0] // Reset length but keep capacity
}

func (b *Buffer) Write(p []byte) {
	b.data = append(b.data, p...)
}

func (b *Buffer) Bytes() []byte {
	return b.data
}

// BufferPool manages a pool of reusable buffers
type BufferPool struct {
	pool sync.Pool
}

func NewBufferPool() *BufferPool {
	return &BufferPool{
		pool: sync.Pool{
			New: func() interface{} {
				// Pre-allocate with reasonable capacity
				return &Buffer{data: make([]byte, 0, 1024)}
			},
		},
	}
}

func (bp *BufferPool) Get() *Buffer {
	return bp.pool.Get().(*Buffer)
}

func (bp *BufferPool) Put(b *Buffer) {
	b.Reset()
	bp.pool.Put(b)
}

// OptimizedProcessor compares processing with and without object pooling
type OptimizedProcessor struct {
	bufferPool *BufferPool
	results    [][]byte
	mu         sync.Mutex
}

func NewOptimizedProcessor() *OptimizedProcessor {
	return &OptimizedProcessor{
		bufferPool: NewBufferPool(),
		results:    make([][]byte, 0),
	}
}

// doubleClamped doubles b and saturates at 255. The math must happen in int:
// with byte arithmetic b*2 wraps around and a "> 255" check is never true.
func doubleClamped(b byte) byte {
	v := int(b) * 2
	if v > 255 {
		v = 255
	}
	return byte(v)
}

func (op *OptimizedProcessor) ProcessWithPool(input []byte) []byte {
	// Get buffer from pool and return it when done
	buffer := op.bufferPool.Get()
	defer op.bufferPool.Put(buffer)

	// Process data (simulate transformation)
	for _, b := range input {
		buffer.Write([]byte{doubleClamped(b)})
	}

	// Copy the result, because the buffer goes back to the pool
	result := make([]byte, len(buffer.Bytes()))
	copy(result, buffer.Bytes())

	op.mu.Lock()
	op.results = append(op.results, result)
	op.mu.Unlock()
	return result
}

func (op *OptimizedProcessor) ProcessWithoutPool(input []byte) []byte {
	// Allocate new buffer each time
	buffer := make([]byte, 0, len(input))
	for _, b := range input {
		buffer = append(buffer, doubleClamped(b))
	}

	op.mu.Lock()
	op.results = append(op.results, buffer)
	op.mu.Unlock()
	return buffer
}

func benchmarkProcessing(name string, processFunc func([]byte) []byte, iterations int) {
	runtime.GC() // Clean slate
	var before runtime.MemStats
	runtime.ReadMemStats(&before)

	start := time.Now()
	for i := 0; i < iterations; i++ {
		input := make([]byte, 100) // 100 byte input
		for j := range input {
			input[j] = byte(j)
		}
		processFunc(input)
	}
	duration := time.Since(start)

	var after runtime.MemStats
	runtime.ReadMemStats(&after)

	fmt.Printf("%s Results:\n", name)
	fmt.Printf(" Duration: %v\n", duration)
	fmt.Printf(" Allocations: %d\n", after.Mallocs-before.Mallocs)
	fmt.Printf(" Total Allocated: %d KB\n", (after.TotalAlloc-before.TotalAlloc)/1024)
	fmt.Printf(" GC Cycles: %d\n", after.NumGC-before.NumGC)
	fmt.Println()
}

func main() {
	processor := NewOptimizedProcessor()
	iterations := 10000
	fmt.Printf("Running %d iterations...\n\n", iterations)

	// Benchmark without pooling
	benchmarkProcessing("Without Pool", processor.ProcessWithoutPool, iterations)

	// Drop retained results so the second run starts from the same state
	processor.results = nil

	// Benchmark with pooling
	benchmarkProcessing("With Pool", processor.ProcessWithPool, iterations)

	// Both versions still allocate one result slice per call, so the gap may be
	// small; pooling pays off when the pooled object removes an allocation.
	fmt.Println("💡 Compare the numbers: pooling helps only when it removes allocations from the hot path")
}
```

Slice and Map Optimization

Pre-allocating slices and maps with appropriate capacity reduces reallocations:

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// DataAnalyzer demonstrates slice and map optimization techniques.
// It reuses internal buffers, so it is not safe for concurrent use.
type DataAnalyzer struct {
	// Pre-allocated working slices to avoid repeated allocations
	workBuffer []int
	tempSlice  []string
}

func NewDataAnalyzer() *DataAnalyzer {
	return &DataAnalyzer{
		workBuffer: make([]int, 0, 1000),   // Pre-allocate capacity
		tempSlice:  make([]string, 0, 100), // Pre-allocate capacity
	}
}

// OptimizedAnalysis demonstrates proper slice and map usage
func (da *DataAnalyzer) OptimizedAnalysis(data []int) map[string]int {
	// Reset working buffer length but keep capacity
	da.workBuffer = da.workBuffer[:0]

	// Use working buffer to avoid allocations
	for _, val := range data {
		if val > 0 {
			da.workBuffer = append(da.workBuffer, val*val)
		}
	}

	// Size the map for the exact number of chunks: no rehashing, and no
	// oversized bucket array from a loose estimate
	chunkSize := 100
	numChunks := (len(da.workBuffer) + chunkSize - 1) / chunkSize
	results := make(map[string]int, numChunks)

	// Process in chunks; subslicing shares the buffer, so no allocation
	for i := 0; i < len(da.workBuffer); i += chunkSize {
		end := i + chunkSize
		if end > len(da.workBuffer) {
			end = len(da.workBuffer)
		}
		chunk := da.workBuffer[i:end]

		sum := 0
		for _, val := range chunk {
			sum += val
		}
		key := fmt.Sprintf("chunk_%d", i/chunkSize)
		results[key] = sum
	}
	return results
}

// UnoptimizedAnalysis shows common inefficient patterns
func (da *DataAnalyzer) UnoptimizedAnalysis(data []int) map[string]int {
	results := make(map[string]int) // No capacity hint
	var processed []int             // No pre-allocation

	for _, val := range data {
		if val > 0 {
			// Repeated append without capacity planning causes reallocations
			processed = append(processed, val*val)
		}
	}

	chunkSize := 100
	for i := 0; i < len(processed); i += chunkSize {
		end := i + chunkSize
		if end > len(processed) {
			end = len(processed)
		}

		// Creating new slice each time
		chunk := make([]int, end-i)
		copy(chunk, processed[i:end])

		sum := 0
		for _, val := range chunk {
			sum += val
		}
		key := fmt.Sprintf("chunk_%d", i/chunkSize)
		results[key] = sum
	}
	return results
}

func benchmarkAnalysis(name string, analyzer *DataAnalyzer, analysisFunc func([]int) map[string]int) {
	// Generate test data
	testData := make([]int, 5000)
	for i := range testData {
		testData[i] = i - 2500 // Mix of positive and negative
	}

	runtime.GC()
	var before runtime.MemStats
	runtime.ReadMemStats(&before)
	start := time.Now()

	// Run multiple iterations
	var results map[string]int
	for i := 0; i < 100; i++ {
		results = analysisFunc(testData)
	}

	duration := time.Since(start)
	var after runtime.MemStats
	runtime.ReadMemStats(&after)

	fmt.Printf("%s:\n", name)
	fmt.Printf(" Duration: %v\n", duration)
	fmt.Printf(" Results count: %d\n", len(results))
	fmt.Printf(" Allocations: %d\n", after.Mallocs-before.Mallocs)
	fmt.Printf(" Memory allocated: %d KB\n", (after.TotalAlloc-before.TotalAlloc)/1024)
	fmt.Printf(" GC cycles: %d\n", after.NumGC-before.NumGC)
	fmt.Println()
}

func main() {
	analyzer := NewDataAnalyzer()
	fmt.Println("Comparing optimized vs unoptimized slice and map usage:")
	fmt.Println()

	benchmarkAnalysis("Unoptimized", analyzer, analyzer.UnoptimizedAnalysis)
	benchmarkAnalysis("Optimized", analyzer, analyzer.OptimizedAnalysis)

	fmt.Println("💡 Key optimizations:")
	fmt.Println(" - Pre-allocate slices and maps with appropriate capacity")
	fmt.Println(" - Reuse slices by resetting length, keeping capacity")
	fmt.Println(" - Use slice operations instead of copying when possible")
	fmt.Println(" - Provide capacity hints for maps to reduce rehashing")
}
```

Best Practices

1. Profile Before Optimizing

Always measure before making changes. Use go tool pprof to identify actual bottlenecks rather than guessing. Profile in production-like conditions with realistic data sizes and access patterns.

2. Minimize Heap Allocations

Favor stack allocation by keeping variables local and avoiding unnecessary pointer usage. The Go compiler’s escape analysis determines allocation location, so write code that helps variables stay on the stack.

3. Pre-allocate with Appropriate Capacity

When you know the approximate size of slices or maps, pre-allocate with make([]T, 0, capacity) or make(map[K]V, capacity). This prevents multiple reallocations as the data structure grows.
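The difference is easy to measure. In this minimal sketch (fillByAppend, fillPreallocated, and sink are illustrative names), growing from a nil slice reallocates several times as capacity runs out, while make([]int, 0, n) reserves the backing array once. The package-level sink keeps the compiler from placing either slice on the stack.

```go
package main

import (
	"fmt"
	"testing"
)

var sink []int // Package-level sink so the slices escape and are heap-allocated

// fillByAppend grows from a nil slice, so append reallocates as capacity runs out.
func fillByAppend(n int) []int {
	var s []int
	for i := 0; i < n; i++ {
		s = append(s, i)
	}
	return s
}

// fillPreallocated reserves capacity once with make([]T, 0, n).
func fillPreallocated(n int) []int {
	s := make([]int, 0, n)
	for i := 0; i < n; i++ {
		s = append(s, i)
	}
	return s
}

func main() {
	const n = 10000
	grow := testing.AllocsPerRun(100, func() { sink = fillByAppend(n) })
	pre := testing.AllocsPerRun(100, func() { sink = fillPreallocated(n) })
	fmt.Printf("append from nil: %.0f allocs, preallocated: %.0f alloc\n", grow, pre)
}
```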

4. Use Object Pooling for Frequently Allocated Objects

Implement sync.Pool for objects that are frequently created and discarded, especially in hot paths. This is particularly effective for buffers, temporary data structures, and protocol objects.

5. Avoid Memory Leaks in Long-Running Applications

Be careful with slice operations that might retain references to large underlying arrays. Use copy() to create independent slices when necessary, and set slice elements to nil when they’re no longer needed.
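A minimal sketch of both fixes, with illustrative helper names: reslicing keeps the full backing array (visible as its capacity), copying produces a right-sized array, and clearing dropped slots in a slice of slices stops the shared backing array from referencing those buffers.

```go
package main

import "fmt"

// headShared returns the first n bytes by reslicing: the result shares src's
// backing array, so the whole array stays reachable while the result is alive.
func headShared(src []byte, n int) []byte {
	return src[:n]
}

// headCopied copies the first n bytes into a new, right-sized array, so src
// can be collected once the caller drops it.
func headCopied(src []byte, n int) []byte {
	out := make([]byte, n)
	copy(out, src[:n])
	return out
}

// trimTail keeps the first keep entries and sets the rest to nil, so the
// backing array no longer references the dropped buffers.
func trimTail(bufs [][]byte, keep int) [][]byte {
	for i := keep; i < len(bufs); i++ {
		bufs[i] = nil
	}
	return bufs[:keep]
}

func main() {
	big := make([]byte, 1<<20) // 1 MB, e.g. a file read into memory
	shared := headShared(big, 16)
	copied := headCopied(big, 16)
	fmt.Println(cap(shared), cap(copied)) // 1048576 16

	bufs := [][]byte{make([]byte, 1024), make([]byte, 1024), make([]byte, 1024)}
	bufs = trimTail(bufs, 1)
	fmt.Println(len(bufs), cap(bufs)) // 1 3
}
```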

6. Optimize String Operations

Use strings.Builder for concatenating multiple strings, and consider byte slices for intensive string manipulation. Avoid creating temporary strings in loops.
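As a minimal sketch (joinConcat and joinBuilder are illustrative names), repeated += creates a new string on every iteration, while a strings.Builder sized with Grow can build the same result with a single allocation for the final string.

```go
package main

import (
	"fmt"
	"strings"
	"testing"
)

var sinkStr string // Keeps results alive so the measurement is not optimized away

// joinConcat builds the string with +=, allocating a new string each iteration.
func joinConcat(parts []string) string {
	s := ""
	for _, p := range parts {
		s += p + ","
	}
	return s
}

// joinBuilder computes the final length, reserves it once, then appends.
func joinBuilder(parts []string) string {
	n := 0
	for _, p := range parts {
		n += len(p) + 1
	}
	var b strings.Builder
	b.Grow(n)
	for _, p := range parts {
		b.WriteString(p)
		b.WriteByte(',')
	}
	return b.String()
}

func main() {
	parts := strings.Fields("alpha beta gamma delta epsilon zeta eta theta")
	fmt.Println(joinBuilder(parts) == joinConcat(parts)) // true
	fmt.Printf("concat: %.0f allocs, builder: %.0f allocs\n",
		testing.AllocsPerRun(100, func() { sinkStr = joinConcat(parts) }),
		testing.AllocsPerRun(100, func() { sinkStr = joinBuilder(parts) }))
}
```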

7. Monitor GC Metrics in Production

Track GC frequency and pause times in production, and tune the GOGC environment variable (and, since Go 1.19, the GOMEMLIMIT soft memory limit) based on your application's memory usage patterns and latency requirements.

Common Pitfalls

1. Slice Memory Leaks

When creating slices from larger slices, the underlying array remains referenced. If you only need a small portion, copy the data to a new slice to allow garbage collection of the original array.

2. Map Growth Without Capacity Hints

Maps that grow significantly without initial capacity hints experience multiple rehashing operations. Always provide a reasonable capacity estimate when you know the expected size.

3. Premature Optimization

Optimizing without profiling data often leads to complex code with minimal performance benefits. Focus on algorithmic improvements first, then micro-optimizations based on actual profile data.

4. Ignoring Escape Analysis

Writing code that forces heap allocation when stack allocation would suffice. Use go build -gcflags="-m" to see escape analysis decisions and understand why variables escape to the heap.

5. Ineffective Object Pooling

Using sync.Pool for objects that don’t benefit from reuse, or failing to reset object state before returning to the pool. Object pooling should be measured to ensure it provides actual benefits.

Real-World Use Cases

High-Throughput Web Services

In web services handling thousands of requests per second, memory optimization becomes critical. A large e-commerce platform reduced memory usage by 40% and improved response times by implementing object pooling for JSON marshaling buffers and optimizing database result processing. They pre-allocated slices for batch operations and used memory pools for temporary data structures.

Data Processing Pipelines

A real-time analytics system processing millions of events per minute optimized their memory usage by implementing custom allocators for event objects and reusing processing buffers. They reduced GC pressure by 60% and improved throughput by 25% through careful slice management and strategic use of sync.Pool.

Microservices Architecture

A fintech company with hundreds of microservices implemented standardized memory profiling across their fleet. They identified common patterns of inefficient map usage and slice operations, creating shared libraries with optimized data structures. This systematic approach reduced overall memory costs by 30% across their infrastructure.

Performance Considerations

Memory optimization directly impacts several performance characteristics:

Garbage Collection Impact: Reduced allocations lead to fewer GC cycles and shorter pause times. Monitor GOGC settings and consider GOMEMLIMIT for containerized applications.

Cache Efficiency: Better memory layout and reduced allocations improve CPU cache hit rates. Consider memory access patterns when designing data structures.

Scalability: Optimized memory usage allows applications to handle more concurrent operations within the same resource constraints, improving overall scalability.

Testing Approach

Benchmark-Driven Development

Create benchmarks for memory-intensive operations using testing.B.ReportAllocs() to track allocation counts:

go test -bench=. -benchmem -memprofile=mem.prof
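A benchmark like the one below would normally live in a _test.go file and run under go test -bench=. -benchmem. To keep this sketch self-contained it is invoked through testing.Benchmark from main instead; BenchmarkJoin and its input are illustrative.

```go
package main

import (
	"fmt"
	"strings"
	"testing"
)

// BenchmarkJoin measures strings.Join; b.ReportAllocs adds allocs/op and B/op
// to the output even without the -benchmem flag.
func BenchmarkJoin(b *testing.B) {
	parts := []string{"alpha", "beta", "gamma"}
	b.ReportAllocs()
	for i := 0; i < b.N; i++ {
		_ = strings.Join(parts, ",")
	}
}

func main() {
	res := testing.Benchmark(BenchmarkJoin)
	fmt.Printf("%d iterations, %d B/op, %d allocs/op\n",
		res.N, res.AllocedBytesPerOp(), res.AllocsPerOp())
}
```

Tracking allocs/op per benchmark over time catches regressions that total runtime alone can hide.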

Continuous Profiling

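Implement continuous profiling in staging environments to catch memory regressions early. Use a managed continuous profiler such as Google Cloud Profiler, or periodically collect heap profiles from the /debug/pprof endpoints with your own monitoring, to track memory metrics over time.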

Load Testing

Perform load testing with realistic data sizes and access patterns. Memory behavior often changes significantly under load due to GC pressure and allocation patterns.

Monitoring and Alerting

Production Memory Monitoring

Effective memory optimization extends beyond development into production monitoring. Implement comprehensive monitoring to track memory metrics and detect issues before they impact users:

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"log"
	"net/http"
	"runtime"
	"sync"
	"time"
)

// MemoryMonitor tracks and reports memory metrics
type MemoryMonitor struct {
	metrics         map[string]interface{}
	mu              sync.RWMutex
	alertThresholds map[string]float64
	lastCollect     time.Time // When CollectMetrics last ran (used for the allocation rate)
}

func NewMemoryMonitor() *MemoryMonitor {
	return &MemoryMonitor{
		metrics: make(map[string]interface{}),
		// Threshold keys must match the metric names set in CollectMetrics
		alertThresholds: map[string]float64{
			"heap_mb":           500, // Alert if heap > 500MB
			"last_gc_pause_ms":  100, // Alert if the latest GC pause > 100ms
			"alloc_rate_mb_sec": 50,  // Alert if allocation rate > 50MB/sec
		},
		lastCollect: time.Now(),
	}
}

func (mm *MemoryMonitor) CollectMetrics() {
	var m runtime.MemStats
	runtime.ReadMemStats(&m) // Briefly stops the world; avoid calling it in tight loops

	mm.mu.Lock()
	defer mm.mu.Unlock()

	// Calculate allocation rate since the previous collection
	now := time.Now()
	totalAllocMB := float64(m.TotalAlloc) / 1024 / 1024
	if elapsed := now.Sub(mm.lastCollect).Seconds(); elapsed > 0 {
		if last, ok := mm.metrics["total_alloc_mb"].(float64); ok {
			mm.metrics["alloc_rate_mb_sec"] = (totalAllocMB - last) / elapsed
		}
	}

	// Core metrics
	mm.metrics["heap_mb"] = float64(m.HeapAlloc) / 1024 / 1024
	mm.metrics["heap_sys_mb"] = float64(m.HeapSys) / 1024 / 1024
	mm.metrics["total_alloc_mb"] = totalAllocMB
	mm.metrics["gc_cycles"] = m.NumGC
	mm.metrics["next_gc_mb"] = float64(m.NextGC) / 1024 / 1024
	mm.metrics["gc_cpu_fraction"] = m.GCCPUFraction

	// PauseNs is a circular buffer; the most recent pause is at (NumGC+255)%256
	if m.NumGC > 0 {
		recentPause := m.PauseNs[(m.NumGC+255)%256]
		mm.metrics["last_gc_pause_ms"] = float64(recentPause) / 1e6
	}

	mm.lastCollect = now
}

func (mm *MemoryMonitor) CheckAlerts() []string {
	mm.mu.RLock()
	defer mm.mu.RUnlock()

	var alerts []string
	for metric, threshold := range mm.alertThresholds {
		if value, ok := mm.metrics[metric].(float64); ok && value > threshold {
			alerts = append(alerts, fmt.Sprintf(
				"ALERT: %s = %.2f exceeds threshold %.2f", metric, value, threshold))
		}
	}
	return alerts
}

func (mm *MemoryMonitor) GetMetrics() map[string]interface{} {
	mm.mu.RLock()
	defer mm.mu.RUnlock()

	result := make(map[string]interface{}, len(mm.metrics))
	for k, v := range mm.metrics {
		result[k] = v
	}
	return result
}

// MetricsHandler provides HTTP endpoint for metrics. encoding/json takes care
// of quoting and escaping, which hand-built JSON easily gets wrong.
func (mm *MemoryMonitor) MetricsHandler(w http.ResponseWriter, r *http.Request) {
	mm.CollectMetrics()
	alerts := mm.CheckAlerts()
	if alerts == nil {
		alerts = []string{} // Encode as [] rather than null
	}

	w.Header().Set("Content-Type", "application/json")
	enc := json.NewEncoder(w)
	enc.SetIndent("", "  ")
	if err := enc.Encode(map[string]interface{}{
		"timestamp": time.Now().Format(time.RFC3339),
		"metrics":   mm.GetMetrics(),
		"alerts":    alerts,
	}); err != nil {
		log.Printf("encoding metrics: %v", err)
	}
}

// SimulateMemoryLoad creates memory pressure for demonstration
func SimulateMemoryLoad(ctx context.Context) {
	var data [][]byte
	ticker := time.NewTicker(100 * time.Millisecond)
	defer ticker.Stop()

	for {
		select {
		case <-ctx.Done():
			return
		case <-ticker.C:
			// Allocate varying amounts of memory
			size := 1024 * (len(data)%100 + 1)
			data = append(data, make([]byte, size))

			// Occasionally clean up to simulate realistic patterns
			if len(data) > 1000 {
				// Copy the recent half into a fresh slice; data[500:] alone would
				// keep the old chunks reachable through the shared backing array
				data = append([][]byte(nil), data[500:]...)
				runtime.GC() // Force cleanup
			}
		}
	}
}

func main() {
	monitor := NewMemoryMonitor()

	// Start memory monitoring
	go func() {
		ticker := time.NewTicker(5 * time.Second)
		defer ticker.Stop()
		for range ticker.C {
			monitor.CollectMetrics()
			if alerts := monitor.CheckAlerts(); len(alerts) > 0 {
				log.Println("Memory alerts:")
				for _, alert := range alerts {
					log.Printf(" %s", alert)
				}
			}
		}
	}()

	// Set up HTTP endpoints
	http.HandleFunc("/metrics", monitor.MetricsHandler)
	http.HandleFunc("/health", func(w http.ResponseWriter, r *http.Request) {
		monitor.CollectMetrics()
		if alerts := monitor.CheckAlerts(); len(alerts) > 0 {
			w.WriteHeader(http.StatusServiceUnavailable)
			fmt.Fprintf(w, "Unhealthy: %d alerts\n", len(alerts))
			return
		}
		w.WriteHeader(http.StatusOK)
		fmt.Fprintf(w, "Healthy\n")
	})

	// Start simulated load
	ctx, cancel := context.WithTimeout(context.Background(), 2*time.Minute)
	defer cancel()
	go SimulateMemoryLoad(ctx)

	log.Println("Memory monitor running on :8080")
	log.Println("Endpoints: /metrics, /health")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Setting Up Alerts

Configure monitoring systems to track key memory metrics:

- Heap growth rate: Alert when heap grows faster than expected

- GC frequency: Monitor for increased garbage collection cycles

- Memory leaks: Track steady increases in heap usage over time

- GC pause times: Alert when pause times exceed SLA requirements
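Alongside runtime.MemStats, the runtime/metrics package (Go 1.16+) exposes runtime statistics under stable, documented names. The sketch below reads two of them and applies a heap-growth rule of the kind listed above; readHeapAndGC, heapGrowthExceeded, and the 50% ratio are illustrative assumptions, not recommended values.

```go
package main

import (
	"fmt"
	"runtime/metrics"
)

// Metric names documented by the runtime/metrics package.
const (
	gcCyclesMetric  = "/gc/cycles/total:gc-cycles"
	heapBytesMetric = "/memory/classes/heap/objects:bytes"
)

// readHeapAndGC returns the number of completed GC cycles and the bytes held
// by heap objects (live objects plus dead ones not yet freed by the GC).
func readHeapAndGC() (gcCycles, heapBytes uint64, err error) {
	samples := []metrics.Sample{{Name: gcCyclesMetric}, {Name: heapBytesMetric}}
	metrics.Read(samples)
	for _, s := range samples {
		if s.Value.Kind() != metrics.KindUint64 {
			return 0, 0, fmt.Errorf("metric %s is not supported by this Go version", s.Name)
		}
	}
	return samples[0].Value.Uint64(), samples[1].Value.Uint64(), nil
}

// heapGrowthExceeded is a hypothetical alert rule: it fires when the heap grew
// by more than maxRatio (0.5 means 50 percent) between two samples.
func heapGrowthExceeded(prev, cur uint64, maxRatio float64) bool {
	if prev == 0 {
		return false
	}
	return float64(cur) > float64(prev)*(1+maxRatio)
}

func main() {
	_, before, err := readHeapAndGC()
	if err != nil {
		panic(err)
	}

	// Hold on to some memory so the second sample differs
	held := make([][]byte, 0, 100)
	for i := 0; i < 100; i++ {
		held = append(held, make([]byte, 64<<10))
	}

	cycles, after, err := readHeapAndGC()
	if err != nil {
		panic(err)
	}
	fmt.Printf("GC cycles: %d, heap objects: %d KB -> %d KB (%d buffers held)\n",
		cycles, before>>10, after>>10, len(held))
	if heapGrowthExceeded(before, after, 0.5) {
		fmt.Println("ALERT: heap grew by more than half between samples")
	}
}
```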

Advanced Optimization Techniques

Custom Memory Allocators

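For specialized use cases, such as large numbers of fixed-size buffers with predictable lifetimes, consider implementing a custom allocator that recycles blocks. Treat it as a last resort: code built on unsafe bypasses the type system, and the garbage collector cannot see Go pointers stored in raw byte memory.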

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"unsafe"
)

// FixedSizeAllocator manages fixed-size memory blocks and recycles freed ones.
// Blocks are raw byte memory: never store Go pointers in them (for example a
// struct with a next *Node field), because the garbage collector does not scan
// byte memory for pointers and could free the referenced objects.
type FixedSizeAllocator struct {
	blockSize  int
	blocks     []unsafe.Pointer // Keeps every block reachable so the GC never reclaims it
	freeBlocks []unsafe.Pointer // Blocks returned by Free, ready for reuse
	mu         sync.Mutex
	allocated  int
	maxBlocks  int
}

func NewFixedSizeAllocator(blockSize, maxBlocks int) *FixedSizeAllocator {
	return &FixedSizeAllocator{
		blockSize:  blockSize,
		blocks:     make([]unsafe.Pointer, 0, maxBlocks),
		freeBlocks: make([]unsafe.Pointer, 0, maxBlocks),
		maxBlocks:  maxBlocks,
	}
}

// Allocate returns a block, or nil when the limit is reached. Reused blocks
// are not zeroed and still contain whatever the previous owner wrote.
func (fsa *FixedSizeAllocator) Allocate() unsafe.Pointer {
	fsa.mu.Lock()
	defer fsa.mu.Unlock()

	// Try to reuse a free block first
	if n := len(fsa.freeBlocks); n > 0 {
		ptr := fsa.freeBlocks[n-1]
		fsa.freeBlocks = fsa.freeBlocks[:n-1]
		return ptr
	}

	// Allocate a new block if under the limit
	if fsa.allocated < fsa.maxBlocks {
		ptr := unsafe.Pointer(&make([]byte, fsa.blockSize)[0])
		fsa.blocks = append(fsa.blocks, ptr)
		fsa.allocated++
		return ptr
	}
	return nil // No more blocks available
}

// Free returns a block to the free list. ptr must come from Allocate on this
// allocator and must not be freed twice; neither condition is checked here.
func (fsa *FixedSizeAllocator) Free(ptr unsafe.Pointer) {
	fsa.mu.Lock()
	defer fsa.mu.Unlock()
	fsa.freeBlocks = append(fsa.freeBlocks, ptr)
}

func (fsa *FixedSizeAllocator) Stats() (allocated, free, total int) {
	fsa.mu.Lock()
	defer fsa.mu.Unlock()
	return fsa.allocated - len(fsa.freeBlocks), len(fsa.freeBlocks), fsa.allocated
}

func main() {
	allocator := NewFixedSizeAllocator(128, 1000)
	fmt.Println("Testing custom allocator...")

	iterations := 10000

	// Test with custom allocator
	runtime.GC()
	var before runtime.MemStats
	runtime.ReadMemStats(&before)

	var ptrs []unsafe.Pointer
	for i := 0; i < iterations; i++ {
		if ptr := allocator.Allocate(); ptr != nil {
			ptrs = append(ptrs, ptr)
		}
	}

	// Free half the blocks
	for i := 0; i < len(ptrs)/2; i++ {
		allocator.Free(ptrs[i])
	}

	var after runtime.MemStats
	runtime.ReadMemStats(&after)

	allocated, free, total := allocator.Stats()
	fmt.Printf("Custom Allocator Results:\n")
	fmt.Printf(" Allocated blocks: %d\n", allocated)
	fmt.Printf(" Free blocks: %d\n", free)
	fmt.Printf(" Total blocks: %d\n", total)
	fmt.Printf(" System allocations: %d\n", after.Mallocs-before.Mallocs)
	fmt.Printf(" Memory allocated: %d KB\n", (after.TotalAlloc-before.TotalAlloc)/1024)
	fmt.Println("\n💡 Custom allocators can reduce GC pressure for specialized use cases")
}
```

Conclusion

Effective Go memory optimization requires a systematic approach combining profiling, analysis, and targeted improvements. The key takeaways for mastering memory optimization include:

Profile-driven optimization is essential – always measure before and after changes to ensure improvements are real and significant. Go’s excellent profiling tools make this straightforward, so there’s no excuse for guessing about performance bottlenecks.

Understanding Go’s memory model and garbage collector behavior enables you to write code that works with the runtime rather than against it. Focus on reducing heap allocations, helping escape analysis make better decisions, and designing data structures that minimize GC pressure.

Strategic use of object pooling and pre-allocation can dramatically reduce GC pressure in high-throughput applications. However, these techniques should be applied judiciously based on actual profiling data rather than assumptions about performance needs.

Continuous monitoring and testing ensure that optimizations remain effective as your application evolves. Memory optimization is not a one-time activity but an ongoing process that requires attention throughout the development lifecycle. Implement monitoring in production to catch regressions early.

Balancing optimization with maintainability is crucial – complex optimizations should be well-documented, thoroughly tested, and provide measurable benefits. Don’t sacrifice code clarity for marginal performance gains unless you’re working on performance-critical systems.

Production readiness requires comprehensive monitoring, alerting, and the ability to diagnose memory issues in live systems. Build observability into your applications from the start, and establish clear SLAs for memory usage and GC behavior.

The techniques covered in this article – from basic profiling setup to advanced custom allocators – provide a comprehensive toolkit for optimizing Go applications. Start with profiling to identify bottlenecks, apply targeted optimizations based on data, and maintain vigilance through continuous monitoring. With these practices, you’ll be able to build Go applications that perform efficiently at scale while maintaining clean, maintainable code.

Remember that premature optimization is still the root of all evil – but when optimization is needed, Go provides excellent tools and techniques to achieve dramatic performance improvements through careful memory management.

Monitoring and Alerting

Production Memory Monitoring

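Memory optimization does not end at development time. In production, track memory metrics continuously so that regressions surface before they affect users. The example below samples runtime.MemStats on a timer, compares selected values against alert thresholds, and exposes the results over HTTP: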

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"log"
	"net/http"
	"runtime"
	"sync"
	"time"
)

const mb = 1024 * 1024

// MemoryMonitor tracks and reports memory metrics
type MemoryMonitor struct {
	mu              sync.RWMutex
	metrics         map[string]interface{}
	alertThresholds map[string]float64
	lastCollect     time.Time // when the previous sample was taken
}

func NewMemoryMonitor() *MemoryMonitor {
	return &MemoryMonitor{
		metrics: make(map[string]interface{}),
		// Keys must match the metric names set in CollectMetrics.
		alertThresholds: map[string]float64{
			"heap_mb":           500, // alert if live heap > 500 MB
			"last_gc_pause_ms":  100, // alert if the latest GC pause > 100 ms
			"alloc_rate_mb_sec": 50,  // alert if allocation rate > 50 MB/s
		},
		lastCollect: time.Now(),
	}
}

// CollectMetrics samples runtime.MemStats. ReadMemStats briefly stops the
// world, so sample every few seconds rather than on every request.
func (mm *MemoryMonitor) CollectMetrics() {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)

	mm.mu.Lock()
	defer mm.mu.Unlock()

	now := time.Now()
	totalAllocMB := float64(m.TotalAlloc) / mb

	// Allocation rate: growth of the cumulative TotalAlloc counter per second
	if elapsed := now.Sub(mm.lastCollect).Seconds(); elapsed > 0 {
		if prev, ok := mm.metrics["total_alloc_mb"].(float64); ok {
			mm.metrics["alloc_rate_mb_sec"] = (totalAllocMB - prev) / elapsed
		}
	}

	// Core metrics (float division avoids truncating to whole megabytes)
	mm.metrics["heap_mb"] = float64(m.HeapAlloc) / mb
	mm.metrics["heap_sys_mb"] = float64(m.HeapSys) / mb
	mm.metrics["total_alloc_mb"] = totalAllocMB
	mm.metrics["gc_cycles"] = m.NumGC
	mm.metrics["next_gc_mb"] = float64(m.NextGC) / mb
	mm.metrics["gc_cpu_fraction"] = m.GCCPUFraction

	// PauseNs is a 256-entry circular buffer; the latest pause is at (NumGC+255)%256
	if m.NumGC > 0 {
		mm.metrics["last_gc_pause_ms"] = float64(m.PauseNs[(m.NumGC+255)%256]) / 1e6
	}

	mm.lastCollect = now
}

// CheckAlerts returns a message for every float metric above its threshold
func (mm *MemoryMonitor) CheckAlerts() []string {
	mm.mu.RLock()
	defer mm.mu.RUnlock()

	alerts := []string{} // non-nil so it encodes as [] rather than null
	for metric, threshold := range mm.alertThresholds {
		if value, ok := mm.metrics[metric].(float64); ok && value > threshold {
			alerts = append(alerts, fmt.Sprintf(
				"ALERT: %s = %.2f exceeds threshold %.2f", metric, value, threshold))
		}
	}
	return alerts
}

// GetMetrics returns a copy so callers can use it without holding the lock
func (mm *MemoryMonitor) GetMetrics() map[string]interface{} {
	mm.mu.RLock()
	defer mm.mu.RUnlock()

	result := make(map[string]interface{}, len(mm.metrics))
	for k, v := range mm.metrics {
		result[k] = v
	}
	return result
}

// MetricsHandler serves the latest background sample and any alerts as JSON.
// It does not call ReadMemStats itself, so frequent scrapes neither stop the
// world nor shrink the window used for the allocation rate.
func (mm *MemoryMonitor) MetricsHandler(w http.ResponseWriter, r *http.Request) {
	resp := struct {
		Timestamp string                 `json:"timestamp"`
		Metrics   map[string]interface{} `json:"metrics"`
		Alerts    []string               `json:"alerts"`
	}{
		Timestamp: time.Now().Format(time.RFC3339),
		Metrics:   mm.GetMetrics(),
		Alerts:    mm.CheckAlerts(),
	}

	w.Header().Set("Content-Type", "application/json")
	// encoding/json escapes strings correctly, unlike hand-built JSON
	if err := json.NewEncoder(w).Encode(resp); err != nil {
		log.Printf("encoding metrics: %v", err)
	}
}

// SimulateMemoryLoad creates memory pressure for demonstration
func SimulateMemoryLoad(ctx context.Context) {
	var data [][]byte
	ticker := time.NewTicker(100 * time.Millisecond)
	defer ticker.Stop()

	for {
		select {
		case <-ctx.Done():
			return
		case <-ticker.C:
			// Allocate chunks of 1 KB to 100 KB
			size := 1024 * (len(data)%100 + 1)
			data = append(data, make([]byte, size))

			// Keep the newer half in a fresh slice so the older chunks become unreachable
			if len(data) > 1000 {
				data = append([][]byte(nil), data[500:]...)
				runtime.GC() // forced only to make GC activity visible in the demo
			}
		}
	}
}

func main() {
	monitor := NewMemoryMonitor()
	monitor.CollectMetrics() // initial sample so the endpoints have data

	// Sample metrics and log alerts every 5 seconds
	go func() {
		ticker := time.NewTicker(5 * time.Second)
		defer ticker.Stop()
		for range ticker.C {
			monitor.CollectMetrics()
			if alerts := monitor.CheckAlerts(); len(alerts) > 0 {
				log.Println("Memory alerts:")
				for _, alert := range alerts {
					log.Printf("  %s", alert)
				}
			}
		}
	}()

	// Set up HTTP endpoints
	http.HandleFunc("/metrics", monitor.MetricsHandler)
	http.HandleFunc("/health", func(w http.ResponseWriter, r *http.Request) {
		if alerts := monitor.CheckAlerts(); len(alerts) > 0 {
			w.WriteHeader(http.StatusServiceUnavailable)
			fmt.Fprintf(w, "Unhealthy: %d alerts\n", len(alerts))
			return
		}
		fmt.Fprintln(w, "Healthy") // implicit 200 OK
	})

	// Generate simulated load for two minutes
	ctx, cancel := context.WithTimeout(context.Background(), 2*time.Minute)
	defer cancel()
	go SimulateMemoryLoad(ctx)

	log.Println("Memory monitor running on :8080")
	log.Println("Endpoints: /metrics, /health")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Setting Up Alerts

Configure monitoring systems to track key memory metrics:

- Heap growth rate: Alert when heap grows faster than expected

- GC frequency: Monitor for increased garbage collection cycles

- Memory leaks: Track steady increases in heap usage over time

- GC pause times: Alert when pause times exceed SLA requirements
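The memory-leak signal in the list above can be approximated with a simple heuristic: sample the heap at regular intervals (ideally right after a GC cycle, when mostly live data remains) and flag a series that grows in every interval. This is a hypothetical sketch; looksLikeLeak and its minGrowth threshold are illustrative, and a positive result is a cue to capture a heap profile, not proof of a leak.

```go
package main

import "fmt"

// looksLikeLeak reports whether heap samples rose in every interval and
// grew by at least minGrowth (0.25 = 25%) from first to last sample.
func looksLikeLeak(samples []float64, minGrowth float64) bool {
	if len(samples) < 2 {
		return false
	}
	for i := 1; i < len(samples); i++ {
		if samples[i] <= samples[i-1] {
			return false // heap shrank or plateaued at least once
		}
	}
	first, last := samples[0], samples[len(samples)-1]
	return first > 0 && (last-first)/first >= minGrowth
}

func main() {
	steady := []float64{100, 112, 125, 139, 150}  // MB, monotonic growth
	sawtooth := []float64{100, 140, 95, 138, 101} // MB, normal GC cycling
	fmt.Println(looksLikeLeak(steady, 0.25))   // true
	fmt.Println(looksLikeLeak(sawtooth, 0.25)) // false
}
```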

Advanced Optimization Techniques

Custom Memory Allocators

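For specialized workloads that repeatedly allocate and discard buffers of the same size, a fixed-size allocator can recycle blocks through a free list instead of leaving each one for the garbage collector: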

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// FixedSizeAllocator hands out fixed-size []byte blocks and recycles freed
// blocks through a free list. It uses ordinary slices rather than
// unsafe.Pointer, so the GC still tracks every block and a block can never
// be reinterpreted as a value of a different size or type.
type FixedSizeAllocator struct {
	mu         sync.Mutex
	blockSize  int
	maxBlocks  int
	allocated  int      // blocks created so far
	freeBlocks [][]byte // freed blocks waiting for reuse
}

func NewFixedSizeAllocator(blockSize, maxBlocks int) *FixedSizeAllocator {
	return &FixedSizeAllocator{
		blockSize:  blockSize,
		maxBlocks:  maxBlocks,
		freeBlocks: make([][]byte, 0, maxBlocks),
	}
}

// Allocate returns a block, or nil once maxBlocks are in use.
// Reused blocks are not zeroed.
func (fsa *FixedSizeAllocator) Allocate() []byte {
	fsa.mu.Lock()
	defer fsa.mu.Unlock()

	// Try to reuse a free block first
	if n := len(fsa.freeBlocks); n > 0 {
		block := fsa.freeBlocks[n-1]
		fsa.freeBlocks[n-1] = nil
		fsa.freeBlocks = fsa.freeBlocks[:n-1]
		return block
	}

	// Allocate a new block if under the limit
	if fsa.allocated < fsa.maxBlocks {
		fsa.allocated++
		return make([]byte, fsa.blockSize)
	}
	return nil // no more blocks available
}

// Free returns a block for reuse; the caller must not use it afterwards.
func (fsa *FixedSizeAllocator) Free(block []byte) {
	if cap(block) < fsa.blockSize {
		return // not a block from this allocator
	}
	fsa.mu.Lock()
	defer fsa.mu.Unlock()
	fsa.freeBlocks = append(fsa.freeBlocks, block[:fsa.blockSize])
}

func (fsa *FixedSizeAllocator) Stats() (inUse, free, total int) {
	fsa.mu.Lock()
	defer fsa.mu.Unlock()
	return fsa.allocated - len(fsa.freeBlocks), len(fsa.freeBlocks), fsa.allocated
}

// OptimizedNode keeps its fixed-size payload in an allocator-managed block
type OptimizedNode struct {
	data      []byte
	next      *OptimizedNode
	allocator *FixedSizeAllocator
}

// Free hands the payload back to the allocator; the node itself stays GC-managed
func (on *OptimizedNode) Free() {
	if on.allocator != nil && on.data != nil {
		on.allocator.Free(on.data)
		on.data = nil
	}
}

func main() {
	allocator := NewFixedSizeAllocator(128, 1000)
	fmt.Println("Testing custom allocator...")

	const iterations = 10000

	runtime.GC()
	var before runtime.MemStats
	runtime.ReadMemStats(&before)

	// Only the first 1000 requests succeed: maxBlocks caps the allocator
	var blocks [][]byte
	for i := 0; i < iterations; i++ {
		if b := allocator.Allocate(); b != nil {
			blocks = append(blocks, b)
		}
	}

	// Free half the blocks
	for i := 0; i < len(blocks)/2; i++ {
		allocator.Free(blocks[i])
	}

	// A node borrows a recycled block for its payload and returns it
	node := &OptimizedNode{data: allocator.Allocate(), allocator: allocator}
	node.Free()

	var after runtime.MemStats
	runtime.ReadMemStats(&after)

	inUse, free, total := allocator.Stats()
	fmt.Printf("Custom Allocator Results:\n")
	fmt.Printf("  Blocks in use: %d\n", inUse)
	fmt.Printf("  Free blocks: %d\n", free)
	fmt.Printf("  Total blocks: %d\n", total)
	fmt.Printf("  Heap allocations: %d\n", after.Mallocs-before.Mallocs)
	fmt.Printf("  Bytes allocated: %d KB\n", (after.TotalAlloc-before.TotalAlloc)/1024)

	fmt.Println("\n💡 Custom allocators can reduce GC pressure for specialized use cases")
}
```

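For many workloads, the standard library's sync.Pool is a simpler way to recycle fixed-size buffers than a hand-written free list. The sketch below assumes 128-byte buffers to mirror the allocator example; bufPool and process are hypothetical names. Unlike a free list, a sync.Pool may drop pooled items at any time (in practice around garbage collections), so it never pins memory indefinitely.

```go
package main

import (
	"fmt"
	"sync"
)

const blockSize = 128

// bufPool recycles fixed-size buffers between calls.
var bufPool = sync.Pool{
	New: func() interface{} {
		b := make([]byte, blockSize)
		// Store a pointer: converting a plain []byte to interface{} would
		// allocate a new slice header on every Put.
		return &b
	},
}

// process copies payload into a pooled buffer and returns its length.
func process(payload string) int {
	bp := bufPool.Get().(*[]byte)
	defer bufPool.Put(bp)

	buf := (*bp)[:0]
	// Payloads longer than blockSize make append allocate a new array;
	// the pooled buffer itself is left unchanged.
	buf = append(buf, payload...)
	return len(buf)
}

func main() {
	fmt.Println(process("hello, pool")) // 11
}
```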

Conclusion

Effective Go memory optimization requires a systematic approach combining profiling, analysis, and targeted improvements. The key takeaways for mastering memory optimization include:

Profile-driven optimization is essential – always measure before and after changes to ensure improvements are real and significant. Go’s excellent profiling tools make this straightforward, so there’s no excuse for guessing about performance bottlenecks.

Understanding Go’s memory model and garbage collector behavior enables you to write code that works with the runtime rather than against it. Focus on reducing heap allocations, helping escape analysis make better decisions, and designing data structures that minimize GC pressure.

Strategic use of object pooling and pre-allocation can dramatically reduce GC pressure in high-throughput applications. However, these techniques should be applied judiciously based on actual profiling data rather than assumptions about performance needs.

Continuous monitoring and testing ensure that optimizations remain effective as your application evolves. Memory optimization is not a one-time activity but an ongoing process that requires attention throughout the development lifecycle. Implement monitoring in production to catch regressions early.

Advanced techniques like custom allocators and memory-efficient data structures can provide significant benefits in specialized scenarios, but should be implemented carefully with thorough testing and clear documentation.

Balancing optimization with maintainability is crucial – complex optimizations should be well-documented, thoroughly tested, and provide measurable benefits. Don’t sacrifice code clarity for marginal performance gains unless you’re working on performance-critical systems.

Production readiness requires comprehensive monitoring, alerting, and the ability to diagnose memory issues in live systems. Build observability into your applications from the start, and establish clear SLAs for memory usage and GC behavior.

The techniques covered in this article – from basic profiling setup to advanced custom allocators – provide a comprehensive toolkit for optimizing Go applications. Start with profiling to identify bottlenecks, apply targeted optimizations based on data, and maintain vigilance through continuous monitoring. With these practices, you’ll be able to build Go applications that perform efficiently at scale while maintaining clean, maintainable code.

Remember that premature optimization is still the root of all evil – but when optimization is needed, Go provides excellent tools and techniques to achieve dramatic performance improvements through careful memory management.

Additional Resources

- Go Memory Management and Allocation - Official Go garbage collection guide

- Profiling Go Programs - Comprehensive profiling tutorial from the Go team

- Go Memory Model - Official specification of Go’s memory model

- High Performance Go Workshop - Dave Cheney’s performance optimization guide

- pprof Documentation - Complete pprof package documentation

- Escape Analysis in Go - Detailed explanation of escape analysis

- Go GC Tuning Guide - Official guide to garbage collector tuning

- Continuous Profiling in Production - Best practices for production profiling

- Memory Optimization Patterns - Real-world examples from high-performance Go applications## Monitoring and Alerting

Production Memory Monitoring

Effective memory optimization extends beyond development into production monitoring. Implement comprehensive monitoring to track memory metrics and detect issues before they impact users:

package main

import (

"context"

"fmt"

"log"

"net/http"

"runtime"

"sync"

"time"

)

// MemoryMonitor tracks and reports memory metrics

type MemoryMonitor struct {

metrics map[string]interface{}

mu sync.RWMutex

alertThresholds map[string]float64

lastGCTime time.Time

}

func NewMemoryMonitor() *MemoryMonitor {

return &MemoryMonitor{

metrics: make(map[string]interface{}),

alertThresholds: map[string]float64{

"heap_mb": 500, // Alert if heap > 500MB

"gc_pause_ms": 100, // Alert if GC pause > 100ms

"alloc_rate_mb_sec": 50, // Alert if allocation rate > 50MB/sec

},

lastGCTime: time.Now(),

}

}

func (mm *MemoryMonitor) CollectMetrics() {

var m runtime.MemStats

runtime.ReadMemStats(&m)

mm.mu.Lock()

defer mm.mu.Unlock()

// Calculate allocation rate

now := time.Now()

timeDiff := now.Sub(mm.lastGCTime).Seconds()

if timeDiff > 0 {

if lastAlloc, exists := mm.metrics["total_alloc_mb"]; exists {

allocDiff := float64(m.TotalAlloc/1024/1024) - lastAlloc.(float64)

mm.metrics["alloc_rate_mb_sec"] = allocDiff / timeDiff

}

}

// Core metrics

mm.metrics["heap_mb"] = float64(m.HeapAlloc / 1024 / 1024)

mm.metrics["heap_sys_mb"] = float64(m.HeapSys / 1024 / 1024)

mm.metrics["total_alloc_mb"] = float64(m.TotalAlloc / 1024 / 1024)

mm.metrics["gc_cycles"] = m.NumGC

mm.metrics["next_gc_mb"] = float64(m.NextGC / 1024 / 1024)

mm.metrics["gc_cpu_fraction"] = m.GCCPUFraction

// GC pause metrics

if m.NumGC > 0 {

// Get the most recent GC pause

recentPause := m.PauseNs[(m.NumGC+255)%256]

mm.metrics["last_gc_pause_ms"] = float64(recentPause) / 1000000

}

mm.lastGCTime = now

}

func (mm *MemoryMonitor) CheckAlerts() []string {

mm.mu.RLock()

defer mm.mu.RUnlock()

var alerts []string

for metric, threshold := range mm.alertThresholds {

if value, exists := mm.metrics[metric]; exists {

if floatValue, ok := value.(float64); ok && floatValue > threshold {

alerts = append(alerts, fmt.Sprintf(

"ALERT: %s = %.2f exceeds threshold %.2f",

metric, floatValue, threshold))

}

}

}

return alerts

}

func (mm *MemoryMonitor) GetMetrics() map[string]interface{} {

mm.mu.RLock()

defer mm.mu.RUnlock()

result := make(map[string]interface{})

for k, v := range mm.metrics {

result[k] = v

}

return result

}

// MetricsHandler provides HTTP endpoint for metrics

func (mm *MemoryMonitor) MetricsHandler(w http.ResponseWriter, r *http.Request) {

mm.CollectMetrics()

metrics := mm.GetMetrics()

alerts := mm.CheckAlerts()

w.Header().Set("Content-Type", "application/json")

fmt.Fprintf(w, "{\n")

fmt.Fprintf(w, ` "timestamp": "%s",`+"\n", time.Now().Format(time.RFC3339))

fmt.Fprintf(w, ` "metrics": {`+"\n")

first := true

for k, v := range metrics {

if !first {

fmt.Fprintf(w, ",\n")

}

fmt.Fprintf(w, ` "%s": %v`, k, v)

first = false

}

fmt.Fprintf(w, "\n },\n")

fmt.Fprintf(w, ` "alerts": [`)

for i, alert := range alerts {

if i > 0 {

fmt.Fprintf(w, ", ")

}

fmt.Fprintf(w, `"%s"`, alert)

}

fmt.Fprintf(w, "]\n}\n")

}

func main() {

monitor := NewMemoryMonitor()

// Start memory monitoring

go func() {

ticker := time.NewTicker(5 * time.Second)

defer ticker.Stop()

for range ticker.C {

monitor.CollectMetrics()

alerts := monitor.CheckAlerts()

if len(alerts) > 0 {

log.Println("Memory alerts:")

for _, alert := range alerts {

log.Printf(" %s", alert)

}

}

}

}()

// Set up HTTP endpoints

http.HandleFunc("/metrics", monitor.MetricsHandler)

http.HandleFunc("/health", func(w http.ResponseWriter, r *http.Request) {

monitor.CollectMetrics()

alerts := monitor.CheckAlerts()

if len(alerts) > 0 {

w.WriteHeader(http.StatusServiceUnavailable)

fmt.Fprintf(w, "Unhealthy: %d alerts\n", len(alerts))

return

}

w.WriteHeader(http.StatusOK)

fmt.Fprintf(w, "Healthy\n")

})

// Start simulated load for demonstration

go func() {

var data [][]byte

ticker := time.NewTicker(100 * time.Millisecond)

defer ticker.Stop()

for range ticker.C {

size := 1024 * (len(data)%100 + 1)

chunk := make([]byte, size)

data = append(data, chunk)

if len(data) > 1000 {

data = data[500:]

runtime.GC()

}

}

}()

log.Println("Memory monitor running on :8080")

log.Println("Endpoints: /metrics, /health")

log.Fatal(http.ListenAndServe(":8080", nil))

}

Setting Up Production Alerts

Configure monitoring systems to track key memory metrics:

- Heap growth rate: Alert when heap grows faster than expected

- GC frequency: Monitor for increased garbage collection cycles

- Memory leaks: Track steady increases in heap usage over time

- GC pause times: Alert when pause times exceed SLA requirements

Advanced Optimization Techniques

Memory-Efficient Data Structures

Design data structures that minimize memory overhead and improve cache locality:

package main

import (

"fmt"

"runtime"

"unsafe"

)

// PackedStruct demonstrates struct field ordering for memory efficiency

type PackedStruct struct {

// Order fields from largest to smallest to minimize padding

largeField uint64 // 8 bytes

mediumField uint32 // 4 bytes

smallField1 uint16 // 2 bytes

smallField2 uint16 // 2 bytes

tinyField uint8 // 1 byte

boolField bool // 1 byte

// Total: 18 bytes (with minimal padding)

}

type UnpackedStruct struct {

// Poor field ordering causes padding

tinyField uint8 // 1 byte + 7 bytes padding

largeField uint64 // 8 bytes

boolField bool // 1 byte + 3 bytes padding

mediumField uint32 // 4 bytes

smallField1 uint16 // 2 bytes + 6 bytes padding

smallField2 uint16 // 2 bytes + 6 bytes padding

// Total: 32 bytes (with excessive padding)

}

// BitSet demonstrates compact boolean storage

type BitSet struct {

bits []uint64

size int

}

func NewBitSet(size int) *BitSet {

numWords := (size + 63) / 64 // Round up to nearest 64

return &BitSet{

bits: make([]uint64, numWords),

size: size,

}

}

func (bs *BitSet) Set(index int) {

if index < 0 || index >= bs.size {

return

}

wordIndex := index / 64

bitIndex := index % 64

bs.bits[wordIndex] |= 1 << bitIndex

}

func (bs *BitSet) Get(index int) bool {

if index < 0 || index >= bs.size {

return false

}

wordIndex := index / 64

bitIndex := index % 64

return (bs.bits[wordIndex] & (1 << bitIndex)) != 0

}

func (bs *BitSet) MemoryUsage() int {

return len(bs.bits) * 8 // 8 bytes per uint64

}

// StringInterner reduces memory usage for duplicate strings

type StringInterner struct {

strings map[string]string

}

func NewStringInterner() *StringInterner {

return &StringInterner{

strings: make(map[string]string),

}

}

func (si *StringInterner) Intern(s string) string {

if interned, exists := si.strings[s]; exists {

return interned

}

si.strings[s] = s

return s

}

func demonstrateStructPacking() {

packed := PackedStruct{}

unpacked := UnpackedStruct{}

fmt.Printf("Struct size comparison:\n")

fmt.Printf(" PackedStruct: %d bytes\n", unsafe.Sizeof(packed))

fmt.Printf(" UnpackedStruct: %d bytes\n", unsafe.Sizeof(unpacked))

fmt.Printf(" Space savings: %.1f%%\n",

100.0 * (1.0 - float64(unsafe.Sizeof(packed))/float64(unsafe.Sizeof(unpacked))))

}

func demonstrateBitSet() {

size := 10000

// Compare BitSet vs []bool

bitSet := NewBitSet(size)

boolSlice := make([]bool, size)

fmt.Printf("\nBoolean storage comparison for %d booleans:\n", size)

fmt.Printf(" BitSet: %d bytes\n", bitSet.MemoryUsage())

fmt.Printf(" []bool: %d bytes\n", len(boolSlice))

fmt.Printf(" Space savings: %.1f%%\n",

100.0 * (1.0 - float64(bitSet.MemoryUsage())/float64(len(boolSlice))))

}

func main() {

fmt.Println("Memory-Efficient Data Structures Demo\n")

demonstrateStructPacking()

demonstrateBitSet()

var m runtime.MemStats

runtime.ReadMemStats(&m)

fmt.Printf("\nCurrent heap: %d KB\n", m.HeapAlloc/1024)

fmt.Println("\n💡 Key principles for memory-efficient data structures:")

fmt.Println(" - Order struct fields from largest to smallest")

fmt.Println(" - Use smaller integer types when possible")

fmt.Println(" - Pack boolean values using bitsets")

fmt.Println(" - Pre-allocate slices with known capacity")

fmt.Println(" - Use string interning for duplicate strings")

}

Conclusion

Effective Go memory optimization requires a systematic approach combining profiling, analysis, and targeted improvements. The key takeaways for mastering memory optimization include:

Profile-driven optimization is essential – always measure before and after changes to ensure improvements are real and significant. Go’s excellent profiling tools make this straightforward, so there’s no excuse for guessing about performance bottlenecks.

Understanding Go’s memory model and garbage collector behavior enables you to write code that works with the runtime rather than against it. Focus on reducing heap allocations, helping escape analysis make better decisions, and designing data structures that minimize GC pressure.

Strategic use of object pooling and pre-allocation can dramatically reduce GC pressure in high-throughput applications. However, these techniques should be applied judiciously based on actual profiling data rather than assumptions about performance needs.

Continuous monitoring and testing ensure that optimizations remain effective as your application evolves. Memory optimization is not a one-time activity but an ongoing process that requires attention throughout the development lifecycle. Implement monitoring in production to catch regressions early.

Advanced techniques like memory-efficient data structures can provide significant benefits by reducing memory overhead and improving cache locality. Consider struct field ordering, compact data representations, and string interning where appropriate.

Balancing optimization with maintainability is crucial – complex optimizations should be well-documented, thoroughly tested, and provide measurable benefits. Don’t sacrifice code clarity for marginal performance gains unless you’re working on performance-critical systems.

Production readiness requires comprehensive monitoring, alerting, and the ability to diagnose memory issues in live systems. Build observability into your applications from the start, and establish clear SLAs for memory usage and GC behavior.

The techniques covered in this article – from basic profiling setup to advanced data structure optimization – provide a comprehensive toolkit for optimizing Go applications. Start with profiling to identify bottlenecks, apply targeted optimizations based on data, and maintain vigilance through continuous monitoring. With these practices, you’ll be able to build Go applications that perform efficiently at scale while maintaining clean, maintainable code.

Remember that premature optimization is still the root of all evil – but when optimization is needed, Go provides excellent tools and techniques to achieve dramatic performance improvements through careful memory management.

Additional Resources

- Go Memory Management and Allocation - Official Go garbage collection guide

- Profiling Go Programs - Comprehensive profiling tutorial from the Go team

- Go Memory Model - Official specification of Go’s memory model

- High Performance Go Workshop - Dave Cheney’s performance optimization guide

- pprof Documentation - Complete pprof package documentation

- Escape Analysis in Go - Detailed explanation of escape analysis

- Go GC Tuning Guide - Official guide to garbage collector tuning

- Continuous Profiling in Production - Best practices for production profiling

- Memory Optimization Patterns - Real-world examples from high-performance Go applications## Monitoring and Alerting

Production Memory Monitoring

Effective memory optimization extends beyond development into production monitoring. Implement comprehensive monitoring to track memory metrics and detect issues before they impact users:

package main

import (

"context"

"fmt"

"log"

"net/http"

"runtime"

"sync"

"time"

)

// MemoryMonitor tracks and reports memory metrics

type MemoryMonitor struct {

metrics map[string]interface{}

mu sync.RWMutex

alertThresholds map[string]float64

lastGCTime time.Time

}

func NewMemoryMonitor() *MemoryMonitor {

return &MemoryMonitor{

metrics: make(map[string]interface{}),

alertThresholds: map[string]float64{

"heap_mb": 500, // Alert if heap > 500MB

"gc_pause_ms": 100, // Alert if GC pause > 100ms

"alloc_rate_mb_sec": 50, // Alert if allocation rate > 50MB/sec

},

lastGCTime: time.Now(),

}

}

func (mm *MemoryMonitor) CollectMetrics() {

var m runtime.MemStats

runtime.ReadMemStats(&m)

mm.mu.Lock()

defer mm.mu.Unlock()

// Calculate allocation rate

now := time.Now()

timeDiff := now.Sub(mm.lastGCTime).Seconds()

if timeDiff > 0 {

if lastAlloc, exists := mm.metrics["total_alloc_mb"]; exists {

allocDiff := float64(m.TotalAlloc/1024/1024) - lastAlloc.(float64)

mm.metrics["alloc_rate_mb_sec"] = allocDiff / timeDiff

}

}

// Core metrics

mm.metrics["heap_mb"] = float64(m.HeapAlloc / 1024 / 1024)

mm.metrics["heap_sys_mb"] = float64(m.HeapSys / 1024 / 1024)

mm.metrics["total_alloc_mb"] = float64(m.TotalAlloc / 1024 / 1024)

mm.metrics["gc_cycles"] = m.NumGC

mm.metrics["next_gc_mb"] = float64(m.NextGC / 1024 / 1024)

mm.metrics["gc_cpu_fraction"] = m.GCCPUFraction

// GC pause metrics

if m.NumGC > 0 {

// Get the most recent GC pause

recentPause := m.PauseNs[(m.NumGC+255)%256]

mm.metrics["last_gc_pause_ms"] = float64(recentPause) / 1000000

}

mm.lastGCTime = now

}

func (mm *MemoryMonitor) CheckAlerts() []string {

mm.mu.RLock()

defer mm.mu.RUnlock()

var alerts []string

for metric, threshold := range mm.alertThresholds {

if value, exists := mm.metrics[metric]; exists {

if floatValue, ok := value.(float64); ok && floatValue > threshold {

alerts = append(alerts, fmt.Sprintf(

"ALERT: %s = %.2f exceeds threshold %.2f",

metric, floatValue, threshold))

}

}

}

return alerts

}

func (mm *MemoryMonitor) GetMetrics() map[string]interface{} {

mm.mu.RLock()

defer mm.mu.RUnlock()

result := make(map[string]interface{})

for k, v := range mm.metrics {

result[k] = v

}

return result

}

// MetricsHandler provides HTTP endpoint for metrics

func (mm *MemoryMonitor) MetricsHandler(w http.ResponseWriter, r *http.Request) {

mm.CollectMetrics()

metrics := mm.GetMetrics()

alerts := mm.CheckAlerts()

w.Header().Set("Content-Type", "application/json")

fmt.Fprintf(w, "{\n")

fmt.Fprintf(w, ` "timestamp": "%s",`+"\n", time.Now().Format(time.RFC3339))

fmt.Fprintf(w, ` "metrics": {`+"\n")

first := true

for k, v := range metrics {

if !first {

fmt.Fprintf(w, ",\n")

}

fmt.Fprintf(w, ` "%s": %v`, k, v)

first = false

}

fmt.Fprintf(w, "\n },\n")

fmt.Fprintf(w, ` "alerts": [`)

for i, alert := range alerts {

if i > 0 {

fmt.Fprintf(w, ", ")

}

fmt.Fprintf(w, `"%s"`, alert)

}

fmt.Fprintf(w, "]\n}\n")

}

func main() {

monitor := NewMemoryMonitor()

// Start memory monitoring

go func() {

ticker := time.NewTicker(5 * time.Second)

defer ticker.Stop()

for range ticker.C {

monitor.CollectMetrics()

alerts := monitor.CheckAlerts()

if len(alerts) > 0 {

log.Println("Memory alerts:")

for _, alert := range alerts {

log.Printf(" %s", alert)

}

}

}

}()

// Set up HTTP endpoints

http.HandleFunc("/metrics", monitor.MetricsHandler)

http.HandleFunc("/health", func(w http.ResponseWriter, r *http.Request) {

monitor.CollectMetrics()

alerts := monitor.CheckAlerts()

if len(alerts) > 0 {

w.WriteHeader(http.StatusServiceUnavailable)

fmt.Fprintf(w, "Unhealthy: %d alerts\n", len(alerts))

return

}

w.WriteHeader(http.StatusOK)

fmt.Fprintf(w, "Healthy\n")

})

// Start simulated load for demonstration

go func() {

var data [][]byte

ticker := time.NewTicker(100 * time.Millisecond)

defer ticker.Stop()

for range ticker.C {

size := 1024 * (len(data)%100 + 1)

chunk := make([]byte, size)

data = append(data, chunk)

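if len(data) > 1000 {

// Copy the newest 500 chunks into a fresh slice. Reslicing alone (data[500:]) would keep the old

// backing array, and the dropped chunks it still references, reachable until a later append reallocates it.

data = append([][]byte(nil), data[500:]...)

runtime.GC()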

}

}

}()

log.Println("Memory monitor running on :8080")

log.Println("Endpoints: /metrics, /health")

log.Fatal(http.ListenAndServe(":8080", nil))

}

Setting Up Production Alerts

Configure monitoring systems to track key memory metrics:

- Heap growth rate: Alert when the heap grows faster than your established baseline

- GC frequency: Monitor for a rising number of garbage collection cycles per minute

- Memory leaks: Track heap usage that keeps climbing across GC cycles instead of leveling off

- GC pause times: Alert when pause times exceed SLA requirements

Advanced Optimization Techniques

Memory-Efficient Data Structures

Design data structures that minimize memory overhead and improve cache locality:

package main

import (

"fmt"

"runtime"

"unsafe"

)

// PackedStruct demonstrates struct field ordering for memory efficiency

type PackedStruct struct {

// Order fields from largest to smallest to minimize padding

largeField uint64 // 8 bytes

mediumField uint32 // 4 bytes

smallField1 uint16 // 2 bytes

smallField2 uint16 // 2 bytes

tinyField uint8 // 1 byte

boolField bool // 1 byte

// 18 bytes of data + 6 bytes trailing padding = 24 bytes on 64-bit platforms (size rounds up to uint64's 8-byte alignment)

}

type UnpackedStruct struct {

// Poor field ordering causes padding

tinyField uint8 // 1 byte + 7 bytes padding

largeField uint64 // 8 bytes

boolField bool // 1 byte + 3 bytes padding

mediumField uint32 // 4 bytes

smallField1 uint16 // 2 bytes

smallField2 uint16 // 2 bytes + 4 bytes trailing padding

// Total: 32 bytes on 64-bit platforms (18 bytes of data + 14 bytes of padding)

}

// BitSet demonstrates compact boolean storage

type BitSet struct {

bits []uint64

size int

}

func NewBitSet(size int) *BitSet {

numWords := (size + 63) / 64 // Round up to a whole number of 64-bit words

return &BitSet{

bits: make([]uint64, numWords),

size: size,

}

}

func (bs *BitSet) Set(index int) {

if index < 0 || index >= bs.size {

return

}

wordIndex := index / 64

bitIndex := index % 64

bs.bits[wordIndex] |= 1 << bitIndex

}

func (bs *BitSet) Get(index int) bool {

if index < 0 || index >= bs.size {

return false

}

wordIndex := index / 64

bitIndex := index % 64

return (bs.bits[wordIndex] & (1 << bitIndex)) != 0

}

func (bs *BitSet) MemoryUsage() int {

return len(bs.bits) * 8 // 8 bytes per uint64

}

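// StringInterner returns one shared copy of each distinct string, so duplicates

// do not each keep their own backing array. It is not safe for concurrent use.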

type StringInterner struct {

strings map[string]string

}

func NewStringInterner() *StringInterner {

return &StringInterner{

strings: make(map[string]string),

}

}

func (si *StringInterner) Intern(s string) string {

if interned, exists := si.strings[s]; exists {

return interned

}

si.strings[s] = s

return s

}

func demonstrateStructPacking() {

packed := PackedStruct{}

unpacked := UnpackedStruct{}

fmt.Printf("Struct size comparison:\n")

fmt.Printf(" PackedStruct: %d bytes\n", unsafe.Sizeof(packed))

fmt.Printf(" UnpackedStruct: %d bytes\n", unsafe.Sizeof(unpacked))

fmt.Printf(" Space savings: %.1f%%\n",

100.0 * (1.0 - float64(unsafe.Sizeof(packed))/float64(unsafe.Sizeof(unpacked))))

}

func demonstrateBitSet() {

size := 10000

// Compare BitSet vs []bool

bitSet := NewBitSet(size)

boolSlice := make([]bool, size)

fmt.Printf("\nBoolean storage comparison for %d booleans:\n", size)

fmt.Printf(" BitSet: %d bytes\n", bitSet.MemoryUsage())

fmt.Printf(" []bool: %d bytes\n", len(boolSlice))

fmt.Printf(" Space savings: %.1f%%\n",

100.0 * (1.0 - float64(bitSet.MemoryUsage())/float64(len(boolSlice))))

}

func main() {

fmt.Print("Memory-Efficient Data Structures Demo\n\n")

demonstrateStructPacking()

demonstrateBitSet()

var m runtime.MemStats

runtime.ReadMemStats(&m)

fmt.Printf("\nCurrent heap: %d KB\n", m.HeapAlloc/1024)

fmt.Println("\n💡 Key principles for memory-efficient data structures:")

fmt.Println(" - Order struct fields from largest to smallest")

fmt.Println(" - Use smaller integer types when possible")

fmt.Println(" - Pack boolean values using bitsets")

fmt.Println(" - Pre-allocate slices with known capacity")

fmt.Println(" - Use string interning for duplicate strings")

}

Custom Memory Allocators for Specialized Use Cases

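For applications with predictable allocation patterns, such as many short-lived objects of a single size, a custom allocator that recycles blocks can reduce allocation counts and GC work. Benchmark before adopting one: the free-list allocator below still obtains its blocks from the Go heap, so the garbage collector continues to track them.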

package main

import (

"fmt"

"runtime"

"sync"

"unsafe"

)

// FixedSizeAllocator manages fixed-size memory blocks efficiently

type FixedSizeAllocator struct {

blockSize int

blocks []unsafe.Pointer

freeBlocks []unsafe.Pointer

mu sync.Mutex

allocated int

maxBlocks int

}

func NewFixedSizeAllocator(blockSize, maxBlocks int) *FixedSizeAllocator {

return &FixedSizeAllocator{

blockSize: blockSize,

blocks: make([]unsafe.Pointer, 0, maxBlocks),

freeBlocks: make([]unsafe.Pointer, 0, maxBlocks),

maxBlocks: maxBlocks,

}

}

func (fsa *FixedSizeAllocator) Allocate() unsafe.Pointer {

fsa.mu.Lock()

defer fsa.mu.Unlock()

// Try to reuse a free block first

if len(fsa.freeBlocks) > 0 {

ptr := fsa.freeBlocks[len(fsa.freeBlocks)-1]

fsa.freeBlocks = fsa.freeBlocks[:len(fsa.freeBlocks)-1]

return ptr

}

// Allocate new block if under limit

if fsa.allocated < fsa.maxBlocks {

// Allocate a new block on the Go heap (GC-managed, not raw memory); keeping the pointer in fsa.blocks keeps it reachable

ptr := unsafe.Pointer(&make([]byte, fsa.blockSize)[0])

fsa.blocks = append(fsa.blocks, ptr)

fsa.allocated++

return ptr

}

return nil // No more blocks available

}

func (fsa *FixedSizeAllocator) Free(ptr unsafe.Pointer) {

fsa.mu.Lock()

defer fsa.mu.Unlock()

// Add to free list for reuse. The block is not zeroed and ptr is not validated, so callers must not free a block twice

fsa.freeBlocks = append(fsa.freeBlocks, ptr)

}

func (fsa *FixedSizeAllocator) Stats() (allocated, free, total int) {

fsa.mu.Lock()

defer fsa.mu.Unlock()